Is “AI for social good” a contradiction? Or is it possible that the moment we’re in is opening a window of opportunity to reset our passive relationship with digital technology?

Careless People

I began the holidays reading Careless People: A Cautionary Tale of Power, Greed, and Lost Idealism by New Zealander Sarah Wynn-Williams, gifted to me by a close friend who lives in Boston. Wynn-Williams chronicles her seven-year rise to become Global Public Policy Director at Facebook (now "Meta"). While the book is a personal memoir, it’s also a dossier on a morally vacuous leadership culture that has spread throughout Big Tech as executives move from one tech giant to another.

The broad themes of her story are well-reported, but it was helpful to get a first-person account of how the culture was shaped over the author’s years at Facebook – characterized by placating executive egos and prioritizing "growth at all costs" – to monopolize a single resource above all else: our attention.

One of several proof points provided by Wynn-Williams is Facebook’s pitch to advertisers about their algorithms’ ability to identify and target “moments when young people need a confidence boost”, through individual user behaviour that indicates anxiety, stress, and depression.

This type of culture seems to be alive and well at dominant AI companies like OpenAI. Last fall, they released "Sora," a generative AI video app capable of creating TikTok-like social media "slop" at an industrial scale. In their announcement, OpenAI acknowledged that problems of passive video consumption – including “doomscrolling, addiction, isolation, and RL [Reinforcement Learning]-sloptimized feeds” – were “top of mind”.


Demobilization Machines

Empirical evidence of the attention-capture “matrix” that FAANG (Facebook, Amazon, Apple, Netflix and Google) companies have built, and of the associated social harms, is tallied by organizations like the Center for Humane Technology. But it’s harder to grasp the impact on ourselves at the individual level.

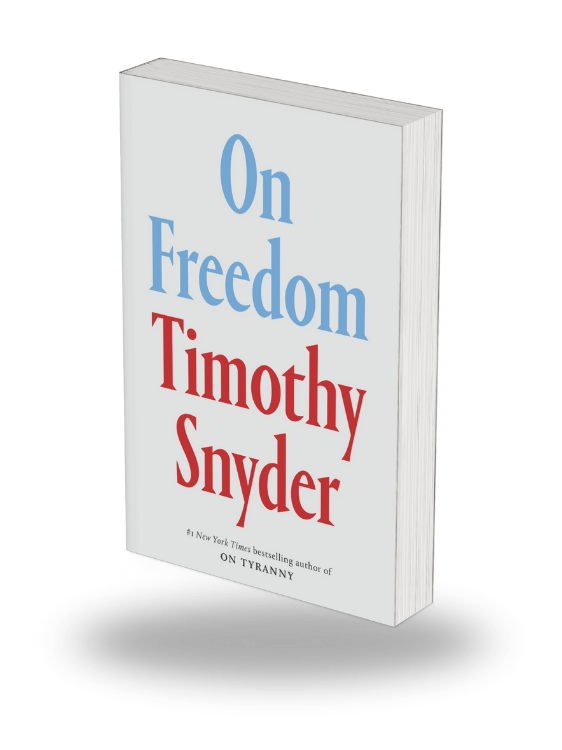

When the leader of the free world invading a sovereign country is just one of several major stories to pay attention to, is this really the moment to be worried about my “digital diet”? It turns out it might be, and revisiting On Freedom by historian Timothy Snyder was helpful in clarifying why.

He lays out five pillars of “positive” freedom, including unpredictability and factuality, that create the conditions for people to live and strive “for” something meaningful, rather than getting trapped in a continual cycle of fighting against, or escaping from, their neighbours and the world. As we become more predictable, isolated, and separated from reality, we become easier to control, and Snyder is clear about the impact of passive use of digital technology on individuals:

"At first, the machine demobilizes. It takes up the time we need to attend to the bodies of others. It consumes energy and imagination. It tends to provide us with reasons not to act: not to exercise, not to make love, not to make friends, not to help our neighbor, not to vote, not to demonstrate. But at some point, when the algorithms locate our political fantasies, we find ourselves acting on the basis of unreality." – Timothy Snyder, On Freedom (page 107)

But, as I write this, I’m also aware of a shift in my relationship to and use of digital tools over the past year. I deleted Instagram and YouTube from my phone in September. I unsubscribed from Netflix in October. I put down the tablet to pick up physical books again. What changed?

AI Glitch in the Matrix

The release of ChatGPT in November 2022, and the flood of generative AI tools that followed, were shocking. The outputs were shocking, the range of utopian and apocalyptic futures being predicted was shocking, and the flagrant system failures and hallucinations were shocking.

The new tools seemed far from ready for prime time, but ChatGPT was setting records as the fastest-growing consumer application of all time.

Using generative AI felt different from day one and demanded a more vigilant posture. The chat interface mimicking human conversation sets up an expectation of truth telling. So when the technology fails to deliver on that and hallucinates, it exposes imperfections not just in the model, but in the company and engineers behind the curtain. It exposes a ‘glitch in the matrix’, and can make an otherwise passive user think differently about the tools.

The promise and existential risks sparked my interest and led me to start looking under the hood. In doing so, I discovered new digital capabilities and viable pathways to develop skills as a builder of digital systems. Previously, that would have required years of learning to code and access to knowledge held tightly inside Big Tech's walled gardens.

From Passengers to Pilots

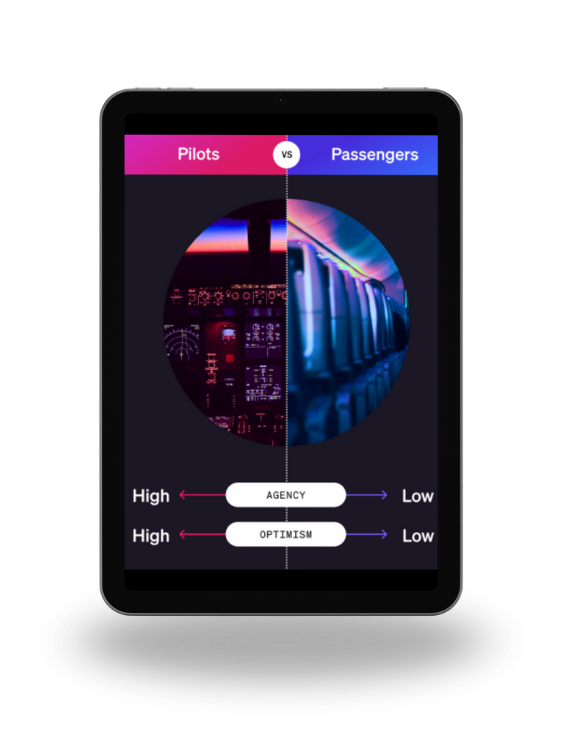

I’m encouraged by how many colleagues at work and in the community are experiencing similar shifts in their relationship to digital tech. A study of 12,000 workers across 18 industries, conducted by BetterUp and Stanford University’s Social Media Lab between 2023 and 2025, found that workers reaping the rewards of AI approached the technology with a high degree of agency:

"Our research identifies ‘Pilots’ – those with high agency and optimism – who use AI to boost creativity and impact, versus ‘Passengers’, who may have fear or reluctance towards AI and may only rely on it to cut corners." – Jeff Hancock, Director, Stanford Social Media Lab, and Professor at Stanford University (Source)

The same study found a significant incentive for organizations to invest in building agency through workplace coaching:

"At the heart of AI fluency are two coachable qualities: agency and optimism. These characteristics are best built in environments marked by psychological safety and strong, transparent leadership communication." – Alex Liebscher, Research Scientist, BetterUp (Source)

Building that capacity in the workplace is what’s motivating Groundforce Digital. We're running pilot projects through the studio and offering team sprint coaching to tackle complex challenges, design solutions, and build a culture that increases agency and impact for individual team members and for the communities they serve.
